Open-Source Unity Catalog and How It Empowers the Open Data Lakehouse

In this article, we explore Unity Catalog: its high-level architecture, components, installation and setup, and its current and future capabilities. We also explain how it is set to play a major role as a key building block of modern enterprise data architecture based on open standards, with interoperability across the leading open table formats Delta Lake and Apache Iceberg and integration with the popular compute engine Apache Spark.

Unity Catalog and its Evolution

Unity Catalog is positioned as the industry's first open-source, multimodal, multi-engine, multi-format catalog for data and AI.

It allows data to be discovered quickly and made accessible to all organizational stakeholders for every kind of analytics, including AI and machine learning. In effect, it is a searchable index of an organization's data, with information about each dataset's format, structure, location and usage. It thus adds semantic value to massive volumes of data in which it would otherwise be very hard to identify what data an organization actually holds.

Databricks, a leading data analytics platform vendor, built Unity Catalog for its own platform over the last couple of years and open-sourced it in mid-2024. Unity Catalog is currently a sandbox project with the LF AI & Data Foundation (part of the Linux Foundation).

Purpose behind Open Sourcing Unity Catalog

Data lakehouse architecture has been widely adopted by many organizations because it combines the capabilities of a data warehouse and a data lake. Huge investments are being made in such solutions, and open table formats like Apache Iceberg and Apache Hudi have gained tremendous momentum and become industry standards. However, the lack of an open-source data catalog was holding back the open data lakehouse architecture as a whole. With Unity Catalog now open source, this restriction is removed.

Open-sourcing Unity Catalog has other advantages too. Its integration with other tools can unlock many possibilities and opportunities within the open-source community. Snowflake has also joined this stride by open-sourcing another data catalog, Polaris (reference: https://www.snowflake.com/en/blog/introducing-polaris-catalog/).

Two of the leading analytics vendors open-sourcing their catalogs shows how important open data lakehouse architecture has become in the industry, and this is only going to intensify the competition between the key players in this space.

Data Lakehouse Architecture & Implementation Challenges

Data lakehouse architecture brings the capabilities of a data lake and a data warehouse together. Here is a reference architecture diagram explaining the high-level data lakehouse architecture.

To bring database-like capabilities to the data lake, open table formats (Delta Lake, Apache Iceberg and Apache Hudi) were created. These open table formats are the foundational building block of the lakehouse and enable ACID transactions: they act as a transactional metadata layer on top of the underlying data lake storage.
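To make the idea of a transactional metadata layer concrete, here is a toy Python sketch, not Delta Lake's actual implementation. Each commit is a numbered JSON file in a `_delta_log` directory (the zero-padded naming mirrors Delta's log entries), and a writer may publish version N only if nobody has created that file yet. This put-if-absent step is what serializes concurrent writers into a single history. The `commit` and `latest_version` helpers and the simplified `add` actions are hypothetical, and the exclusive-create trick assumes a local filesystem; object stores need their own atomic primitive.

```python
import json
import tempfile
from pathlib import Path


def commit(table_dir: Path, version: int, actions: list[dict]) -> bool:
    """Try to publish `version` of the table; False means another writer got there first."""
    log_dir = table_dir / "_delta_log"
    log_dir.mkdir(parents=True, exist_ok=True)
    entry = log_dir / f"{version:020d}.json"
    try:
        # Mode "x" creates the file only if it does not already exist (put-if-absent).
        # A real implementation would also make the content appear atomically; this toy does not.
        with open(entry, "x") as f:
            f.write("\n".join(json.dumps(action) for action in actions))
        return True
    except FileExistsError:
        return False


def latest_version(table_dir: Path) -> int:
    """Highest committed version, or -1 for an empty table (zero padding keeps sort order numeric)."""
    entries = sorted((table_dir / "_delta_log").glob("*.json"))
    return int(entries[-1].stem) if entries else -1


# Two writers race for version 0: only the first commit succeeds.
with tempfile.TemporaryDirectory() as tmp:
    table = Path(tmp) / "orders"
    first = commit(table, 0, [{"add": {"path": "part-0.parquet"}}])
    second = commit(table, 0, [{"add": {"path": "part-1.parquet"}}])
    version = latest_version(table)

print(first, second, version)  # True False 0
```

The losing writer would re-read the log, check for conflicts and retry at version 1; that retry loop is what "optimistic concurrency" refers to.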

This metadata layer is also the natural place to implement governance features such as access control and audit logging. A metadata layer can check whether a client is allowed to access a table before granting it credentials to read the table's raw data from a cloud object store, and it can reliably log every access.
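The check-then-grant flow described above fits in a few lines of Python. This is a hypothetical toy model (the `ToyMetadataLayer` class, its grant table and the token format are invented for illustration), not how Unity Catalog implements credential vending: every read request is logged, and only principals holding a grant receive a short-lived credential.

```python
import secrets
import time
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class ToyMetadataLayer:
    """Hypothetical toy model: grants are checked before short-lived credentials are issued."""

    grants: dict[str, set[str]]  # table name -> principals allowed to read it
    audit_log: list[tuple[float, str, str, bool]] = field(default_factory=list)

    def request_read(self, principal: str, table: str, ttl_s: int = 900) -> Optional[dict]:
        allowed = principal in self.grants.get(table, set())
        # Every attempt is logged, whether or not it is allowed.
        self.audit_log.append((time.time(), principal, table, allowed))
        if not allowed:
            return None
        # Stand-in for a temporary cloud-storage credential scoped to this table.
        return {"table": table, "token": secrets.token_urlsafe(16), "expires_at": time.time() + ttl_s}


layer = ToyMetadataLayer(grants={"sales.orders": {"alice"}})
print(layer.request_read("alice", "sales.orders") is not None)  # True
print(layer.request_read("bob", "sales.orders"))  # None
```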

For data governance, a data catalog is needed to manage all data and AI assets easily and securely. It helps organizations organize their data, manage access privileges and ensure compliance with data regulations.

Challenges

Interoperability Across Formats and Compute Engines

As organizations adopted data lakehouse architecture, their data ended up stored in different table and file formats and processed by different compute engines. Interoperability between these formats and engines soon became a key challenge, and it has since become an important architectural characteristic of modern enterprise data architecture.

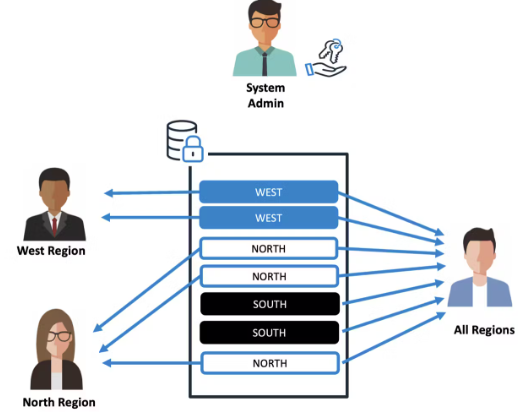

Need for Unified Data Governance

Different tools provide data governance, but their approaches were not unified, which led to inflexible and complex data controls because each tool has its own specific and limited controls. Most of these tools were proprietary, which led to vendor lock-in. This is where a universal data catalog comes in: it provides a centralized metadata repository for all of an organization's data assets.

What is Open-Source Unity Catalog?

Unity Catalog is positioned as the first open, multimodal, open-source catalog for data and AI. Let's look at what this means in detail:

Multi-format support: Data in a modern enterprise data architecture can be stored in Apache Iceberg, Delta Lake, Parquet or CSV format, and Unity Catalog offers support for these formats. In this blog we look at Delta and Iceberg in detail.

Multi-engine support: Modern data architectures are not restricted to a single compute engine; data may be processed with Apache Spark, Trino and others. The Unity Catalog API supports multiple engines, which makes ETL pipelines more flexible and simpler.

Multimodal: In the era of generative AI, there is also a need for a centralized catalog of AI and ML models. By supporting them, Unity Catalog becomes more versatile and future-proof, serving not only data engineering and analytics solutions but also AI/ML solutions.

Open-source API and implementation: an OpenAPI specification and an OSS implementation under the Apache 2.0 license. It is also compatible with the Apache Hive Metastore API and Apache Iceberg's REST catalog API.

Unity Catalog Components

Let's understand the levels of the Unity Catalog structure.

There are three levels. Level 1, at the top, is the catalog. An organization can have multiple catalogs, which can represent business units or other high-level categories of the organization.

Level 2 is the schema, the next, more granular logical category. A schema can represent a single project or use case. Please do not confuse this with a table or database schema.

Level 3, at the bottom of the structure, is any data object stored for future processing and analysis. Unity Catalog supports many kinds of assets, including tables, volumes, models and functions.
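Because every asset sits under a catalog and a schema, it is addressed by a three-part name such as `unity.default.numbers` (assumed here to be the sample catalog, schema and table shipped with the quickstart). The minimal sketch below splits such a name into its three levels; `FullName` and `parse_full_name` are hypothetical helpers for illustration only.

```python
from typing import NamedTuple


class FullName(NamedTuple):
    """Hypothetical container for a three-level Unity Catalog name."""

    catalog: str  # level 1: catalog (e.g. a business unit)
    schema: str   # level 2: schema (e.g. a project or use case)
    asset: str    # level 3: table, volume, model or function


def parse_full_name(full_name: str) -> FullName:
    """Split 'catalog.schema.asset' into its three levels."""
    parts = full_name.split(".")
    if len(parts) != 3 or not all(parts):
        raise ValueError(f"expected catalog.schema.asset, got {full_name!r}")
    return FullName(*parts)


print(parse_full_name("unity.default.numbers"))
# FullName(catalog='unity', schema='default', asset='numbers')
```

The sketch deliberately ignores quoted identifiers that themselves contain dots; it only illustrates how the three levels compose.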

Now let's explore the various components of Unity Catalog.

1. Unity Catalog Server

This is the main component, developed in Java as a microservice. It provides a REST API for all Unity Catalog operations. The server needs to be installed on a VM or machine and configured with a backend database, either MySQL or PostgreSQL.

After installation, the server needs to be started and is expected to run continuously. If it is down, Unity Catalog is unavailable and dependent applications can fail.

As of now, the server is a single point of failure, so high availability has to be set up manually.

2. Unity Catalog Backend Database

Unity Catalog needs an RDBMS, which is primarily used to store information about catalogs and schemas and metadata about their assets. This database is not used to store the actual data, which can live in external storage such as cloud object storage (AWS, Azure or Google Cloud). The storage location is configurable in the Unity Catalog server.

As of now, PostgreSQL and MySQL are supported; for our exploration we configured MySQL as the backend database. After MySQL was installed, the “ucdb” database was created, along with 11 associated tables, when the Unity Catalog server was started for the first time. These 11 tables capture information about catalogs, schemas, columns and so on.

Here is the list of the 11 tables. We will explore how they capture metadata.

Here is the metadata for a sample catalog and its schemas, as captured in the database.

Unity Catalog schemas

Unity Catalog tables

3. Unity Catalog APIs

The REST API provides core functionality for managing catalogs, schemas and tables. As of now, Unity Catalog supports list, get, create, update and delete operations for catalogs, schemas, tables and volumes.

The API will be enhanced, and more features are expected in upcoming releases.
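As an illustration of the list and create operations, the sketch below builds REST requests with Python's standard library. The endpoint paths and the `catalog_name`/`schema_name` query parameters follow the Unity Catalog REST API as we understand it (`/api/2.1/unity-catalog/...` on port 8080 in a local setup); treat them as assumptions and confirm them in the API documentation service for your release. The helper names are hypothetical.

```python
import json
import urllib.parse
import urllib.request

# Assumption: a local server on the default port 8080.
BASE_URL = "http://localhost:8080/api/2.1/unity-catalog"


def list_catalogs_request() -> urllib.request.Request:
    return urllib.request.Request(f"{BASE_URL}/catalogs", method="GET")


def list_schemas_request(catalog: str) -> urllib.request.Request:
    query = urllib.parse.urlencode({"catalog_name": catalog})
    return urllib.request.Request(f"{BASE_URL}/schemas?{query}", method="GET")


def list_tables_request(catalog: str, schema: str) -> urllib.request.Request:
    query = urllib.parse.urlencode({"catalog_name": catalog, "schema_name": schema})
    return urllib.request.Request(f"{BASE_URL}/tables?{query}", method="GET")


def create_catalog_request(name: str, comment: str = "") -> urllib.request.Request:
    body = json.dumps({"name": name, "comment": comment}).encode("utf-8")
    return urllib.request.Request(
        f"{BASE_URL}/catalogs",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send(request: urllib.request.Request) -> dict:
    """Send a request to a running server and decode its JSON response."""
    with urllib.request.urlopen(request, timeout=10) as response:
        return json.loads(response.read() or b"{}")
```

With the server running, `send(list_catalogs_request())` returns the parsed JSON listing; building requests separately from sending them keeps the URL logic easy to inspect.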

4. Unity Catalog UI

The Unity Catalog UI lets you interact with a Unity Catalog server to view or create data and AI assets. This release (v0.2) has very limited functionality: it only allows creating catalogs and schemas, and tables cannot be created from the UI. When set up locally, you can access the UI at http://localhost:3000/data/mycatalog

5. Unity Catalog CLI

The CLI allows users to interact with a Unity Catalog server to create and manage catalogs, schemas, tables across different formats (DELTA, UNIFORM, PARQUET, JSON, and CSV), volumes with unstructured data, and functions.

The following shows the list of catalogs from the command line:

To list the tables under a catalog:
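The same listings can be scripted. The sketch below wraps the CLI with Python's `subprocess`, assuming it runs from the extracted Unity Catalog directory where the CLI lives at `bin/uc`, and that tables are listed per schema (hence both `--catalog` and `--schema`). The `unity` catalog and `default` schema are the sample names we assume ship with the quickstart; `uc_command` and `run_uc` are hypothetical helpers.

```python
import subprocess

# Assumption: run from the extracted Unity Catalog directory, where the CLI is bin/uc.
UC_CLI = "bin/uc"


def uc_command(*args: str) -> list[str]:
    """Build the argument list for one CLI invocation."""
    return [UC_CLI, *args]


def run_uc(*args: str) -> str:
    """Run the CLI and return its standard output; raises CalledProcessError on failure."""
    result = subprocess.run(uc_command(*args), capture_output=True, text=True, check=True)
    return result.stdout


# bin/uc catalog list
LIST_CATALOGS = uc_command("catalog", "list")
# bin/uc table list --catalog unity --schema default
LIST_TABLES = uc_command("table", "list", "--catalog", "unity", "--schema", "default")
```

For example, `print(run_uc("catalog", "list"))` prints the same listing as running the command directly in a shell.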

6. Unity Catalog API Documentation Service

This documentation service has complete information about the supported Unity Catalog APIs, including the parameters and return types of each REST API.

In a local setup, you can access it at http://localhost:8080/docs/#/

The documentation service can also be used for debugging: APIs can be triggered from it and their responses inspected, which is very useful during development.

High Level Unity Catalog Installation Steps

As of release v0.2, the Unity Catalog installation process is somewhat complex and manual. We need to clone the code base from the repository and build it to produce a tarball. Once the tarball is ready, we upload it to a folder on the VM or machine where it will be deployed, and then configure it. No prebuilt tarball is available on the official website.

Prerequisites

Install the following software before installing Unity Catalog:

2. Scala Build Tool SBT (https://www.scala-sbt.org/1.x/docs/Installing-sbt-on-Linux.html)

3. GIT version control (https://git-scm.com/downloads/linux)

4. Installation of MySQL

sudo dnf install mysql-server

sudo systemctl start mysqld.service

sudo systemctl enable mysqld.service

sudo mysql_secure_installation

5. Node.js (https://nodejs.org/en/download)

6. Yarn (https://classic.yarnpkg.com/lang/en/docs/install/#windows-stable)

Installing Unity Catalog

1. Download the code from the Git repository using git clone

Create a directory and run the command below to download the code:

git clone https://github.com/unitycatalog/unitycatalog.git --branch v0.2.0 --single-branch

Here is what the source code directory (unitycatalog) looks like:

2. Package the code (create a tarball)

From inside the cloned unitycatalog directory, use the command: build/sbt createTarball

After a successful build, you should see the message below on the console:

3. Create a separate directory where you want to deploy Unity Catalog and copy the tarball into it.

4. Extract the tarball

For Unity Catalog configuration and details about the folder structure, please refer to https://docs.unitycatalog.io/deployment/

5. Configure MySQL as the backend database.

For MySQL configuration, please refer to https://docs.unitycatalog.io/deployment/

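Download the MySQL JDBC driver (Connector/J) and put it in the jars folder. It is available either as an archive from the MySQL site (extract the jar from it) or as a jar directly from Maven Central: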

curl -O https://dev.mysql.com/get/Downloads/Connector-J/mysql-connector-j-9.1.0.tar.gz

curl -O https://repo1.maven.org/maven2/com/mysql/mysql-connector-j/8.2.0/mysql-connector-j-8.2.0.jar
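Pointing the server at MySQL means editing its Hibernate connection settings. The sketch below assumes they live in `etc/conf/hibernate.properties` and uses the standard Hibernate property names with the Connector/J driver class `com.mysql.cj.jdbc.Driver`; the exact keys your release expects should be checked against the deployment guide linked above. The `ucdb` database name matches the one mentioned earlier; the user and password are placeholders, and both helper functions are hypothetical. The merge keeps every other setting in the file untouched.

```python
def mysql_settings(host: str, port: int, database: str, user: str, password: str) -> dict[str, str]:
    """Assumed Hibernate keys for a MySQL backend (standard Hibernate property names)."""
    return {
        "hibernate.connection.driver_class": "com.mysql.cj.jdbc.Driver",
        "hibernate.connection.url": f"jdbc:mysql://{host}:{port}/{database}",
        "hibernate.connection.username": user,
        "hibernate.connection.password": password,
    }


def update_properties(existing: str, updates: dict[str, str]) -> str:
    """Replace matching key=value lines and append missing keys; other lines are kept as-is."""
    remaining = dict(updates)
    lines = []
    for line in existing.splitlines():
        key = line.split("=", 1)[0].strip()
        if key in remaining:
            lines.append(f"{key}={remaining.pop(key)}")
        else:
            lines.append(line)
    lines.extend(f"{key}={value}" for key, value in remaining.items())
    return "\n".join(lines) + "\n"
```

A typical run would read the properties file, pass its text through `update_properties(text, mysql_settings("localhost", 3306, "ucdb", "uc_user", "change-me"))`, and write the result back before starting the server.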

Starting Unity Catalog Server

Command: nohup bin/start-uc-server > uc.out 2> uc.err &

This runs the server in the background. You can confirm that it started by checking the uc.out file, which should look like the following:

You can browse the REST API documentation service at http://localhost:8080/docs/#/
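Because the server is started in the background, a script that runs right after `nohup bin/start-uc-server` may reach it before it is ready. Below is a minimal polling sketch. It assumes that a successful `GET` on the catalogs endpoint means the server is up, and the probe is passed in as a function so it can be swapped or tested without a running server. `http_ok` and `wait_for_server` are hypothetical helpers.

```python
import time
import urllib.request
from typing import Callable

# Assumption: listing catalogs is a cheap request that succeeds once the server is up.
PROBE_URL = "http://localhost:8080/api/2.1/unity-catalog/catalogs"


def http_ok(url: str) -> bool:
    """True if the URL answers with HTTP 200; connection errors and HTTP errors count as not ready."""
    try:
        with urllib.request.urlopen(url, timeout=2) as response:
            return response.status == 200
    except OSError:  # URLError and HTTPError are both subclasses of OSError
        return False


def wait_for_server(probe: Callable[[], bool], timeout_s: float = 60.0, interval_s: float = 1.0) -> bool:
    """Call `probe` until it returns True or `timeout_s` elapses."""
    deadline = time.monotonic() + timeout_s
    while True:
        if probe():
            return True
        if time.monotonic() >= deadline:
            return False
        time.sleep(interval_s)
```

For example, `wait_for_server(lambda: http_ok(PROBE_URL))` returns `True` once the API answers, or `False` after 60 seconds.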

To start the UI, follow these steps:

1. cd ui (the ui directory of the cloned source code)

2. yarn install (only needed the first time)

3. yarn start

URL for UI: http://localhost:3000/

How Does Unity Catalog Help Solve the Challenges of the Data Lakehouse?

As we have seen, interoperability across formats and compute engines, along with governance, are the key challenges.

Interoperability is largely addressed. However, a lot of work remains on governance capabilities, which we expect to be taken care of in upcoming releases.

Let's look at how Unity Catalog integrates with two widely used technologies: the Apache Spark compute engine and the Apache Iceberg table format.

Apache Spark and Unity Catalog

Apache Spark™ is a multi-language engine for executing data engineering, data science, and machine learning on single-node machines or clusters.

Unity Catalog provides interoperability with different lakehouse formats (such as Apache Iceberg and Delta Lake) and secure, controlled access to the data registered in it.

Here are the simple steps to follow:

1. Start the Unity Catalog server once the installation is done.

2. Create your own catalog from either the Unity Catalog UI or the Unity Catalog CLI.

3. In the spark-submit command, specify the Unity Catalog server URL http://<YOUR_UNITY_CATALOG_SERVER_IP>:8080 and <YOUR_UNITY_CATALOG_NAME>.

4. In the Spark application, you can then access the schemas and tables in that catalog and perform CRUD operations.

Here is a sample spark-submit command:
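The settings that step 3 refers to can be sketched as a PySpark configuration. The property names (`spark.sql.catalog.<name>` set to `io.unitycatalog.spark.UCSingleCatalog`, plus `.uri` and `.token`) and the package coordinates follow the Unity Catalog Spark integration docs for v0.2.0 as we understand them; treat the versions and names as assumptions to verify. The same dictionary drives either a `spark-submit` argument list or a `SparkSession` builder; the helper names are hypothetical.

```python
# Assumed coordinates for Unity Catalog v0.2.0 with Delta Lake 3.2.1 (Scala 2.12); verify for your setup.
PACKAGES = "io.delta:delta-spark_2.12:3.2.1,io.unitycatalog:unitycatalog-spark_2.12:0.2.0"
UC_CATALOG_CLASS = "io.unitycatalog.spark.UCSingleCatalog"


def unity_catalog_conf(server_uri: str, catalog: str, token: str = "") -> dict[str, str]:
    """Spark properties that register `catalog` as a Unity Catalog-backed catalog."""
    prefix = f"spark.sql.catalog.{catalog}"
    return {
        "spark.jars.packages": PACKAGES,
        "spark.sql.extensions": "io.delta.sql.DeltaSparkSessionExtension",
        "spark.sql.catalog.spark_catalog": UC_CATALOG_CLASS,
        prefix: UC_CATALOG_CLASS,
        f"{prefix}.uri": server_uri,  # e.g. http://<YOUR_UNITY_CATALOG_SERVER_IP>:8080
        f"{prefix}.token": token,  # left empty for a local setup without authentication (assumption)
        "spark.sql.defaultCatalog": catalog,
    }


def spark_submit_args(app: str, server_uri: str, catalog: str) -> list[str]:
    """Build a spark-submit argument list with one --conf per property."""
    args = ["spark-submit"]
    for key, value in unity_catalog_conf(server_uri, catalog).items():
        args += ["--conf", f"{key}={value}"]
    return args + [app]


def build_session(server_uri: str, catalog: str):
    """Create a SparkSession with the same properties (requires PySpark)."""
    from pyspark.sql import SparkSession  # lazy import so the helpers above need no dependency

    builder = SparkSession.builder.appName("unity-catalog-demo")
    for key, value in unity_catalog_conf(server_uri, catalog).items():
        builder = builder.config(key, value)
    return builder.getOrCreate()
```

With a session from `build_session("http://localhost:8080", "unity")`, statements such as `spark.sql("SHOW SCHEMAS").show()` or `spark.sql("SELECT * FROM default.numbers").show()` run against the catalog; `default.numbers` is a sample table we assume is present.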

Unity Catalog and Iceberg Integration

As of now, Unity Catalog provides Iceberg integration through the UniForm Delta format, which can be used from both Delta and Iceberg. UniForm tables are Delta tables that carry both Delta Lake and Iceberg metadata, so they can easily be read by Delta or Iceberg clients.
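For reference, UniForm is enabled on a Delta table through table properties. The sketch below only assembles the `CREATE TABLE` statement. The two properties (`delta.enableIcebergCompatV2` and `delta.universalFormat.enabledFormats`) come from the Delta Lake UniForm documentation, while the table name, columns and `LOCATION` are placeholders, and `uniform_table_ddl` is a hypothetical helper. Whether a given Unity Catalog release can create such a table through Spark, rather than only serve existing ones, is an assumption to verify.

```python
def uniform_table_ddl(full_name: str, columns: str, location: str) -> str:
    """Build a CREATE TABLE statement for a Delta table with Iceberg (UniForm) metadata enabled."""
    return (
        f"CREATE TABLE {full_name} ({columns}) USING DELTA "
        f"LOCATION '{location}' "
        "TBLPROPERTIES ("
        "'delta.enableIcebergCompatV2' = 'true', "
        "'delta.universalFormat.enabledFormats' = 'iceberg')"
    )


print(uniform_table_ddl("unity.default.trips_uniform", "id INT, city STRING", "/tmp/trips_uniform"))
```

The string would be passed to `spark.sql(...)` on a session configured for Unity Catalog. The `LOCATION` clause is included on the assumption that v0.2 creates external rather than managed tables from Spark.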

Delta tables with UniForm enabled can be accessed via the Iceberg REST Catalog, which is served at http://localhost:8080/api/2.1/unity-catalog/iceberg/.

Documentation Reference: https://docs.unitycatalog.io/usage/tables/uniform/
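On the Iceberg side, any REST-catalog client can point at that endpoint. Below is a minimal sketch with PyIceberg (`pip install pyiceberg`). Calling `load_catalog` with `type="rest"` and a `uri` is standard PyIceberg usage, but the placeholder token, and whether an extra property naming the Unity Catalog catalog (for example `warehouse` or `prefix`) is needed, are assumptions to check against the UniForm page linked above. `iceberg_catalog_properties` and `open_uniform_table` are hypothetical helpers.

```python
# Assumption: local server, default port, Iceberg REST path as given in the text.
ICEBERG_REST_URI = "http://localhost:8080/api/2.1/unity-catalog/iceberg"


def iceberg_catalog_properties(uri: str = ICEBERG_REST_URI, token: str = "not_used") -> dict[str, str]:
    """Properties for a PyIceberg REST catalog (the token value is a placeholder)."""
    return {"type": "rest", "uri": uri, "token": token}


def open_uniform_table(identifier: str):
    """Load a UniForm table, e.g. 'default.marksheet_uniform', through the Iceberg REST API."""
    from pyiceberg.catalog import load_catalog  # lazy import so the helper above needs no dependency

    catalog = load_catalog("unity", **iceberg_catalog_properties())
    print(catalog.list_namespaces())  # namespaces visible through the REST endpoint
    return catalog.load_table(identifier)
```

If the assumed sample table `default.marksheet_uniform` is present, `open_uniform_table("default.marksheet_uniform").scan().to_arrow()` would read it as an Arrow table (this also requires PyArrow).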

Note: Unity Catalog can also host/sync non-Delta tables when used with third-party libraries such as Apache XTable (formerly OneTable), once the Delta metadata layer has been created on top of the underlying data. Please refer to https://xtable.apache.org/docs/unity-catalog/ to learn more.

Roadmap of Upcoming Features and Releases

The capabilities of Unity Catalog in the current release (v0.2) are very limited, and the product is at an early stage. As of now, Unity Catalog has the table-related capabilities highlighted in the screenshot. Iceberg table support will be available in upcoming releases.

Here is the detailed roadmap for table capabilities.

Important and critical governance features such as RBAC, auditing and lineage are not expected until v0.5; these features are highlighted in yellow.

In addition, the features below need to be released to make this open-source version enterprise-grade and production-ready.

Our Final Thoughts

With Databricks and Snowflake open-sourcing Unity Catalog and Polaris respectively, we are starting to see greater competition between the behemoths of the analytics market, which should ultimately boost growth opportunities in the lakehouse space. Because Databricks and Snowflake have an outsized impact on the investments of nearly every other company in the data and analytics space, lakehouse users across the community will get a chance to reap the rewards as the lakehouse space matures.

As several tech giants in the analytics space (e.g. AWS, Nvidia) have expressed interest in Unity Catalog, and in other OSS catalogs for that matter, we are poised to integrate and innovate on top of this foundation in the coming days.

Thanks for reading. If you want to share your case studies or connect, please ping me via LinkedIn.